Top Videos

Germany's Scholz urges Europe to do more for Ukraine as UK PM ups defence spending

euronews (in English)

A meeting between the British and German leaders made for a show of unity even as disagreements on how to help Ukraine persist.

Taylor Swift fans descend on London pub name-checked on album

FRANCE 24 English

Italy law allows pro-life activists into abortion clinics

FRANCE 24 English

Arizona indicts Trump aides over 2020 election scheme

Deutsche Welle

US Supreme Court to hear Trump immunity claim

FRANCE 24 English

Congress Sends Biden a Bill That Could Ban TikTok

Wibbitz Top Stories

Iran's attack on Israel has muted impact on oil markets

Deutsche Welle

Israel Can Still Drag The US Into War With Iran – OpEd

Eurasia Review

Watching US Fascism In Action From China – OpEd

Eurasia Review

New EU cybersecurity rules push carmakers to shun old models

Deutsche Welle

EU launches probe into China's public procurement of medical devices

euronews (in English)

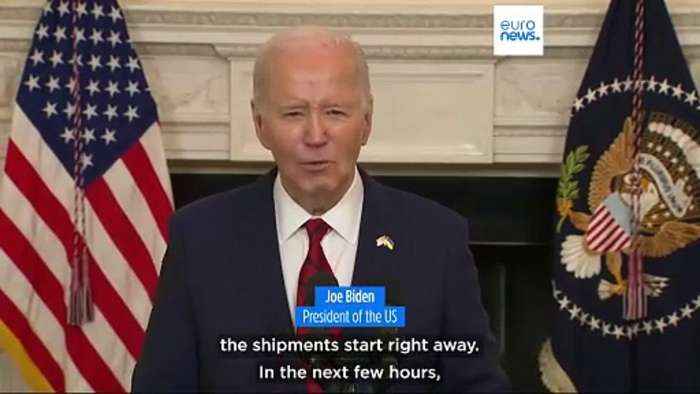

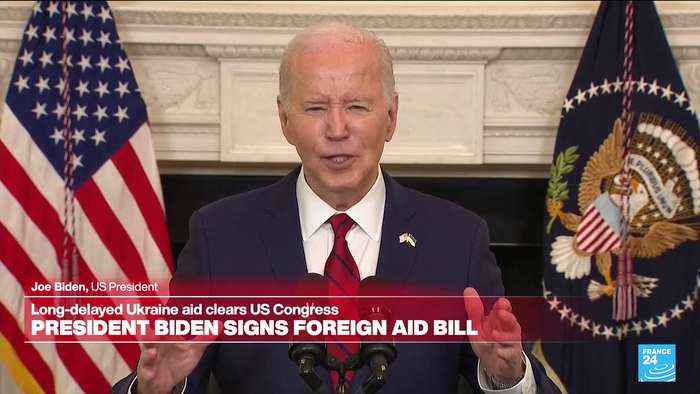

US signs off on more military help for Ukraine

Sydney Morning Herald

What's behind China's gold-buying spree?

Deutsche Welle